There is a well-known saying that if you torture the data long enough it will confess to anything. This reflects the practice of p-hacking, which is the repeated reanalysis of data until statistical significance is achieved. Although it has received far less attention, there is a similar practice among people who conduct complex statistical modeling that involves repeated modification and testing of models until good fit is achieved. I refer to this practice as fit-hacking. My advice is to avoid fit-hacking your statistical model because it is likely to lead to erroneous conclusions.

The Problem with P-Hacking

Statistical significance testing in research allows us to draw conclusions based on probabilities. Suppose you conduct a simple experiment to determine whether a training program for sales associates raises their number of sales. You randomly assign half of your associates to be trained and the rest to serve as a control group. If you find that the trained associates on average sell more product, a simple t-test can tell you how probable a difference at least that large would be if the training had no effect and chance alone were at work. The conventional standard for concluding the training was effective is p less than .05. That is, if chance alone were operating, we would find a difference as large as the one we observed less than 5% of the time.
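
To make the meaning of that probability concrete, here is a minimal sketch in Python. It swaps in a permutation test for the t-test because it needs only the standard library: it shuffles the group labels many times and counts how often chance alone produces a difference at least as large as the observed one. The sales figures and the function name `permutation_p_value` are invented for illustration.

```python
import random
import statistics

def permutation_p_value(trained, control, n_shuffles=5000, seed=0):
    """Estimate how often a mean difference at least as large as the
    observed one arises when group labels are assigned purely at random."""
    rng = random.Random(seed)
    observed = statistics.mean(trained) - statistics.mean(control)
    pooled = list(trained) + list(control)
    n_trained = len(trained)
    at_least_as_large = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # pretend the labels were handed out by chance
        diff = (statistics.mean(pooled[:n_trained])
                - statistics.mean(pooled[n_trained:]))
        if abs(diff) >= abs(observed):
            at_least_as_large += 1
    return at_least_as_large / n_shuffles

# Hypothetical monthly sales counts, invented for illustration only.
trained = [14, 17, 15, 19, 16, 18, 20, 15]
control = [12, 15, 13, 14, 16, 11, 13, 14]

p = permutation_p_value(trained, control)
print(f"estimated two-sided p = {p:.3f}")
```

A small value here says only that a difference this large would be rare if training made no difference. It is not the probability that the training works.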

P-hacking is repeatedly analyzing the same data in different ways until statistical significance is achieved. Let’s say you did not achieve statistical significance with the training example. You can try a variety of hacks, such as eliminating anyone with less than 1 year of service, limiting the analysis to those under 30, or introducing some statistical controls. Joseph Simmons, Leif Nelson, and Uri Simonsohn demonstrated how a few simple manipulations of data can raise the chances of finding erroneous results from 5% to over 60%. And they just scratched the surface of the sorts of p-hacks that can be applied.
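
The inflation is easy to see in a small simulation. The sketch below is a toy, not a reproduction of the Simmons, Nelson, and Simonsohn analysis, so its numbers should not be compared with theirs. It generates studies in which training has no effect at all, then compares the false-positive rate of one planned analysis with the rate when the analyst also tries four subgroup cuts and keeps whichever is significant. Two of the cuts come from the example above; the other two (5 or more years of service, age 40 and over) are invented, statistical controls are left out, and the p-value uses a normal approximation rather than an exact t distribution.

```python
import math
import random
from statistics import NormalDist

def two_sided_p(a, b):
    """Two-group test on means, with a normal approximation to the p-value."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    var_a = sum((x - mean_a) ** 2 for x in a) / (len(a) - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (len(b) - 1)
    z = (mean_a - mean_b) / math.sqrt(var_a / len(a) + var_b / len(b))
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate(n_studies=2000, n_per_group=100, seed=1):
    rng = random.Random(seed)
    planned_hits = 0
    hacked_hits = 0
    for _ in range(n_studies):
        # Each associate: (trained?, sales, years of service, age).
        # Sales do not depend on training, so every "effect" is a false positive.
        people = [(i < n_per_group, rng.gauss(50, 10),
                   rng.uniform(0, 10), rng.uniform(20, 60))
                  for i in range(2 * n_per_group)]
        subsets = [
            people,                              # the planned analysis
            [p for p in people if p[2] >= 1],    # drop under 1 year of service
            [p for p in people if p[3] < 30],    # only those under 30
            [p for p in people if p[2] >= 5],    # invented extra cut
            [p for p in people if p[3] >= 40],   # invented extra cut
        ]
        p_values = []
        for subset in subsets:
            trained = [p[1] for p in subset if p[0]]
            control = [p[1] for p in subset if not p[0]]
            p_values.append(two_sided_p(trained, control))
        planned_hits += p_values[0] < 0.05
        hacked_hits += min(p_values) < 0.05
    return planned_hits / n_studies, hacked_hits / n_studies

planned_rate, hacked_rate = simulate()
print(f"planned analysis only: {planned_rate:.1%} false positives")
print(f"best of five analyses: {hacked_rate:.1%} false positives")
```

Because the hacked analyst reports the smallest of several p-values, the second rate can never be lower than the first, and each extra cut gives chance another opportunity to cross the .05 line.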

Fit Rather Than Statistical Significance with Model Testing

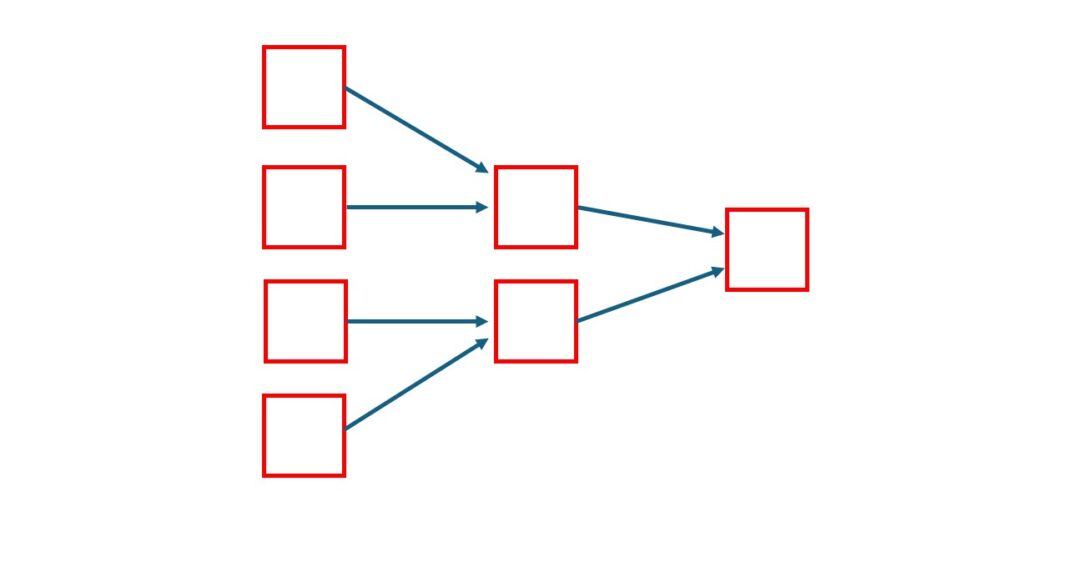

The underlying logic of model testing is different from the more traditional statistical significance testing. Rather than comparing groups and computing the probabilities of finding differences, modeling involves three steps.

- Create a theoretical model that produces a pattern of relationships among several variables

- Collect data on those variables

- Compute fit statistics that indicate how well the observed relationships match the theoretical model. For example, a model predicts what the correlations among several variables should be. A fit statistic would indicate how well the correlations from our data match the predictions from the model.
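
The third step can be sketched in a few lines of Python. The example assumes a hypothetical three-variable chain model (X leads to M, M leads to Y) with made-up standardized paths, and it uses the root mean square residual as the fit statistic: the typical gap between the observed correlations and the ones the model implies. Real modeling software estimates the paths from the data and reports a range of fit statistics, so treat this only as an illustration of the comparison being made.

```python
import math

def implied_correlations(a, b):
    """Correlations implied by a standardized chain model X -> M -> Y,
    where a is the X -> M path and b is the M -> Y path."""
    return {("X", "M"): a, ("M", "Y"): b, ("X", "Y"): a * b}

def rmsr(observed, implied):
    """Root mean square residual between observed and implied correlations."""
    residuals = [observed[pair] - implied[pair] for pair in implied]
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))

# Hypothetical path values and observed correlations, invented for illustration.
implied = implied_correlations(a=0.50, b=0.40)

observed_close = {("X", "M"): 0.50, ("M", "Y"): 0.40, ("X", "Y"): 0.21}
observed_far = {("X", "M"): 0.50, ("M", "Y"): 0.40, ("X", "Y"): 0.45}

print(f"data close to the model: RMSR = {rmsr(observed_close, implied):.3f}")
print(f"data far from the model: RMSR = {rmsr(observed_far, implied):.3f}")
```

The chain model predicts that X and Y correlate only as strongly as the product of the two paths. The second data set shows a much stronger X to Y correlation than that, which is what the larger residual picks up.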

The foundation of modeling is the deductive logic that if the model is correct, we should find good fit when we collect data to test it. This is an “If A then B” argument. However, finding good fit doesn’t guarantee the converse: “If A then B” doesn’t imply “If B then A”. Under the best of circumstances, finding good fit merely means that the model might be correct, but so might many other models that would produce the same fit.

Avoid Fit-Hacking Your Statistical Model

It is common practice with complex statistical modeling to modify models if good fit is not achieved. This might involve adding or deleting variables, adding or deleting the connections among variables, or fixing certain parts of the model to particular values. As with p-hacking, if you do enough manipulating you can find a model that yields good fit. However, that fit is likely based purely on the coincidence that this exact pattern of results happened to occur in this particular data set. Repeat the study and it is likely that the fit will not be good. Thus fit-hacking leads to an erroneous conclusion about model fit.
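
A toy simulation shows why hacked fit tends not to replicate. In this sketch, eight variables are truly unrelated in the population, and the original model correctly predicts that all their correlations are zero. Sampling noise still leaves some misfit in the first data set, so the "analyst" modifies the model by setting the ten worst-fitting correlations to whatever that sample showed. The modified model is then checked against fresh samples. Everything here (the number of variables, the sample size, the `fit_hack` function, and the use of root mean square residual as the fit statistic) is an assumption chosen to keep the example short. Real model modification works on paths and parameters rather than directly on correlations.

```python
import itertools
import math
import random

def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def sample_correlations(rng, n_vars, n_cases):
    """Sample correlations among variables that are unrelated in the population."""
    data = [[rng.gauss(0, 1) for _ in range(n_cases)] for _ in range(n_vars)]
    return {(i, j): pearson(data[i], data[j])
            for i, j in itertools.combinations(range(n_vars), 2)}

def rmsr(observed, implied):
    """Root mean square residual: lower means better fit."""
    total = sum((observed[p] - implied[p]) ** 2 for p in observed)
    return math.sqrt(total / len(observed))

def fit_hack(observed, implied, n_changes):
    """Modify the model where it fits worst, copying the sample's own values."""
    hacked = dict(implied)
    worst = sorted(observed, key=lambda p: abs(observed[p] - implied[p]),
                   reverse=True)
    for pair in worst[:n_changes]:
        hacked[pair] = observed[pair]
    return hacked

rng = random.Random(42)
n_vars, n_cases = 8, 100
original_model = {pair: 0.0
                  for pair in itertools.combinations(range(n_vars), 2)}

first_sample = sample_correlations(rng, n_vars, n_cases)
hacked_model = fit_hack(first_sample, original_model, n_changes=10)

# Fit in the data used for hacking, then average fit over 20 fresh samples.
replications = [sample_correlations(rng, n_vars, n_cases) for _ in range(20)]
original_new = sum(rmsr(s, original_model) for s in replications) / 20
hacked_new = sum(rmsr(s, hacked_model) for s in replications) / 20

print(f"same data: original {rmsr(first_sample, original_model):.3f}, "
      f"hacked {rmsr(first_sample, hacked_model):.3f}")
print(f"new data:  original {original_new:.3f}, hacked {hacked_new:.3f}")
```

The hacked model always looks better in the data used to build it, because its changes were copied from that data. In new samples those changes are just noise from the first study, so the hacked model should be expected to fit worse than the model it replaced.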

The best way to conduct complex modeling is to base the model on a single theory for which there exists sound evidence. Use that theory to derive a model that explains a particular phenomenon. For example, self-determination theory notes that there are three basic human needs, and explains how fulfillment of needs leads to motivation. That theory could be used to create a model of how certain conditions at work might fulfill needs that lead to motivation and to particular behaviors. Finding good fit for such a model that is based on a theory for which there is lots of support can be taken seriously because the model test doesn’t have to stand on its own weak logical foundation. It is bolstered by all the evidence in support of the theory that formed the basis of the model.

As with p-hacking, too often researchers are not completely transparent about their fit-hacking. Sometimes it is because they chose to report only the final model, and sometimes it is because editors ask them to remove preliminary steps to save space. It is a poor practice to report only the final model when preliminary models were tested; the entire process of model testing should be provided. That said, you should avoid fit-hacking your statistical model in the first place, and spend more effort on developing the model from sound theory than on fit-hacking it.

Image created with PowerPoint
